Imagine the colossal hum. The vast, intricate dance of data and silicon, trying to conjure intelligence. Yet, there it is, this curious inefficiency, a silence in the machine. Billions poured into infrastructure, into those powerful GPU clusters, only to find them performing at a disheartening fraction of their potential.

Thirty to fifty-five percent. Think of it. All that investment, all that earnest effort, then the pause. The lag. A link falters, and an entire training job, meant to birth something profound, simply… stops. A staggering $2.25 billion in unused capacity. What a thing, to build such magnificence, only to see so much of its promise dissipate into the air.

Then, Clockwork steps in, with FleetIQ. A different kind of orchestration.

Not more raw power, but smarter handling of what already exists. A Software-Driven Fabric, they call it. Like a meticulously woven tapestry, not of threads but of code, guiding the torrent. It's about transforming what is currently idle and silent into active, productive intelligence.

Uber, accelerating incident detection. DCAI, witnessing swifter AI training and better cluster efficiency. Nebius, with its improved Mean Time Between Failures (MTBF), a quiet triumph in large-scale distributed systems. These are not abstract promises. These are tangible shifts, in real operations. And there is beauty in seeing the current bottleneck, that delicate, vital communication between GPUs, addressed not by brute force but by finesse.

This isn't just a technical upgrade; it's an unlocking.

A reassertion of value. As AI moves from the quiet labs to the bustling production floors, these hindrances become defining challenges. The industry's quiet nod, the funding round closing at four times its previous valuation, tells its own story. Lip-Bu Tan, John Chambers, voices of experience. Their backing speaks to more than capital; it's a recognition of a pivotal shift.

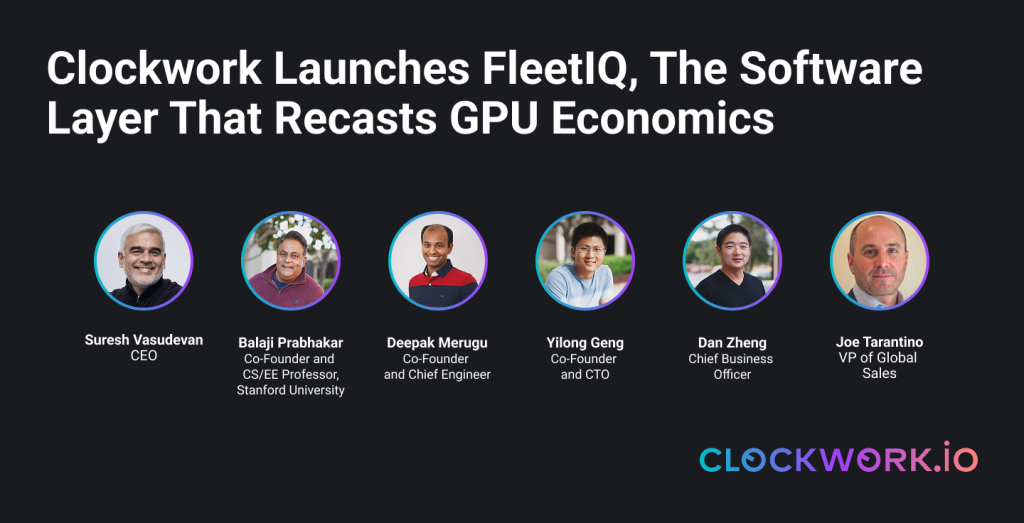

A leadership change, Suresh Vasudevan at the helm, Joe Tarantino connecting it to the world. A gentle recalibration, perhaps, making the vast, complex machinery of modern intelligence simply... work better. More reliably. More equitably in its use of resources.

Stepping back, optimizing AI computing is a complex, multifaceted endeavor, driven by the need to process vast amounts of data faster and more efficiently. Today's AI computing landscape relies heavily on specialized hardware, such as graphics processing units (GPUs) and tensor processing units (TPUs), which are built to accelerate the dense matrix and tensor operations at the heart of neural networks.

However, as AI models continue to grow in size and complexity, there is a pressing need for more sophisticated optimization techniques that can unlock the full potential of these hardware architectures.

One key area of focus is custom hardware, such as field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs), which can be tailored to specific AI workloads. (Google's TPU is itself an ASIC.)

For the workloads they target, these custom-built chips can significantly outperform general-purpose central processing units (CPUs) in both speed and power efficiency.

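Researchers are also exploring new approaches to data compression, encoding, and quantization (storing weights and activations at lower precision, for example 8-bit integers instead of 32-bit floats), which reduce the memory and compute overhead of AI models and enable more efficient processing.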
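To make the idea of quantization concrete, here is a minimal sketch of one common scheme, symmetric per-tensor int8 quantization, assuming plain Python lists stand in for a tensor. It is not FleetIQ's method or any particular library's API; the function names `quantize_int8` and `dequantize_int8` and the sample weights are hypothetical, chosen only for illustration. Every value is mapped to an integer in [-127, 127] using a single scale factor, so each stored value shrinks from 4 bytes (float32) to 1 byte, at the cost of a rounding error of at most half the scale.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Hypothetical helper: symmetric per-tensor int8 quantization.

    One scale maps the largest magnitude to 127; every value is divided
    by that scale, rounded to the nearest integer, and clamped to [-127, 127].
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    # Use a scale of 1.0 for an all-zero input to avoid dividing by zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Hypothetical helper: recover approximate floats from int8 codes."""
    return [x * scale for x in q]


# Illustrative weights only; not data from the article.
weights = [0.5, -1.27, 0.0, 0.01, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

The single shared scale keeps the scheme simple; production frameworks often refine it with per-channel scales or an asymmetric zero point, trading a little bookkeeping for lower error.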

The optimization of AI computing is a rapidly evolving field, with significant implications for a wide range of industries, from healthcare and finance to transportation.
